Siguiendo con la serie sobre Chatbots, he preparado un programilla para que Google Assistant te permita navegar usando la voz por Hacker News (el foro de Y Combinator dedicado a noticias de tecnología) y escuchar el cuerpo de las noticias que más te interesen.

[In English]

Si sólo quieres usarla basta con que le digas a Google «Talk to Hacker News Reader«. Sin embargo, si quieres conocer los puntos clave para poder hacer algo similar en pocas horas y con muy poco código sigue leyendo, porque vamos a ver 6 características humanas muy fáciles de obtener gracias a Dialogflow.

Dialogflow es una plataforma de Google (antes conocida como API.ai), que nos permite diseñar chatbots de un modo sencillo.

Es tan potente, que se pueden hacer muchísimas cosas sin tirar una sóla línea de código.

Por ejemplo, tiene integrado un sistema de reconocimiento de lenguaje natural el que, dándole unos cuantos ejemplos para que entrene, será capaz de reconocer lo que quieren decir los usuarios de nuestra acción y conducirles por las partes de nuestro chatbot para que obtengan la respuesta adecuada.

Por tanto, nos permitirá dar a nuestro chatbot de capacidades «humanas» de un modo muy simple.

1. Escucha

Los intents son el componente principal de un chatbot en Dialogflow. Lo podemos ver como cada unidad de conversación, es cada parte que nuestro sistema va a ser capaz de comprender y dar una respuesta.

Dialogflow nos permite indicar eventos y otras cosas que lanzarán ese intent, y en especial, nos permite indicar distintas frases que le sirvan de guía al chatbot, para que cuando las detecte sepa qué es ese y no otro el que tiene que lanzar.

También permite indicar directamente respuestas ahí mismo, que se irán lanzando aleatoriamente sin que tú tengas que programar nada.

2. Entendimiento

Que el chatbot pueda, sin que programemos nada, distinguir unas frases de otras es genial, pero le falta algo de poder. Al final, no sólo es importante escuchar las frases si no que también hay que entender los conceptos que están encerrados en ellas.

Al introducir las frases de ejemplo, tenemos la posibilidad de seleccionar partes del texto para decirle que es algo importante que debería abstraer y entender más allá de la muestra concreta.

Cuando el motor de reconocimiento del lenguaje entienda alguna de las entidades que hayamos mapeado a variables, las extraerá y nos las pasará como parámetros en las peticiones que nos diga que tenemos que procesar.

El sistema viene ya preparado para entender muchas cosas por defecto, pero nos da la libertad de definir nuestras propias entidades que nos ayuden a modelar exactamente lo que queremos. Por ejemplo, yo me he creado una entidad con los distintos apartados de noticias de Hacker News que se pueden leer: top, new, best, ask, show y job. Así el sistema puede entender que un usuario quiere que le lean los trabajos subidos a la plataforma o las últimas noticias.

3. Inteligencia

Cuando las opciones de respuesta de los intents no son suficientes, podemos crear nuestro propio servicio web que conteste a las peticiones que haya entendido el sistema.

Con las librerías que ofrece Google es fácil montar un servicio en cualquier lenguaje y plataforma. Sin embargo, para cosas pequeñas como el Hacker News Reader, nos permite codificar directamente sobre la plataforma código en node.js que será desplegado de manera transparente para nosotros en Firebase.

Cuando penséis en las cosas que podéis hacer, daros cuenta de que tirando de un servicio (en Firebase o dónde queráis) no estaréis ejecutando código en cliente, por lo que podéis hacer literalmente todo.

Por ejemplo, no os tenéis que ceñir a usar APIs para acceder a contenidos, pues no hay restricciones de cross origin que se apliquen a vuestro código. Tenéis todo Internet a vuestro alcance de un modo sencillísimo.

Mi acción permite al usuario escuchar las noticias que están linkadas desde Hacker News. Para esto se descarga la web (como si fuese un navegador) y la procesa para extraer el contenido (no me he esmerado mucho y se podría hacer mucho mejor).

4. Análisis

Para usar el editor inline, tendremos que tener algunas restricciones como que por narices nuestra función deberá llamarse «dialogflowFirebaseFulfillment» si queremos que se despliegue automáticamente y funcione todo bien.

Sin embargo, gracias a que Dialogflow escucha y entiende, al dotar de inteligencia a nuestro chatbot lo tendremos muy fácil para que sea capaz de realizar los análisis pertinentes de cada petición del usuario.

En el código podremos de un modo sencillo mapear cada uno de los intents que hayamos creado con funciones nuestras. Como estos se encargaban de escuchar, nos indicarán lo que el usuario quiere.

También podremos acceder a los parámetros que el sistema haya entendido gracias a las entidades que hayamos creado (entender).

exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {

const agent = new WebhookClient({

request,

response

});

//...

function read(agent) {

var number = agent.parameters.number || 0;

//...

}

let intentMap = new Map();

intentMap.set('Default Welcome Intent', welcome);

intentMap.set('Repeat', repeat);

intentMap.set('Back', repeat);

intentMap.set('Read', read);

//...

var promise = null;

try {

promise = agent.handleRequest(intentMap).then(function() {

log('all done');

}).catch(manageError);

} catch (e) {

manageError(e);

}

return promise;

});

5. Respuesta

Para que nuestro chatbot conteste, tan solo tenemos que hacer uso del método add de nuestro WebhookClient. Podremos pasarle directamente texto, sugerencias de respuesta para guiar al usuario o fichas con texto enriquecido en dónde podremos embeber imágenes, poner emoticonos, etc.

Hay que tener en cuenta que algunos de los dispositivos en los que potencialmente correrá nuestra aplicación pueden que no tengan pantalla o navegador web por ejemplo. Por lo que hay que tener en cuenta que, si queremos algo puramente conversacional, deberíamos evitar los estímulos visuales y ayudar a nuestro bot a expresarse sólo con el uso de la palabra.

6. Recuerdo

Una de las cosas que más nos exasperan a todos es tener que repetir las cosas una y otra vez, por lo que es importante que nuestro bot recuerde lo que ya se le haya dicho.

Para esto usaremos los contextos. Los contextos es una estructura que maneja Dialogflow para ayudarnos a filtrar entre intents y permitir a la plataforma lanzar el adecuado. Se pueden usar, por ejemplo, para saber si el dispositivo del cliente tiene pantalla.

Su uso no está muy documentado, pero una vez que ves como funcionan los dos métodos básicos, es trivial su uso para guardar información entre cada frase de una conversación.

//...

var context = agent.getContext('vars');

var vars = context ? context.parameters : {

ts: (new Date()).getTime() % 10000,

items: [],

pointer: 0,

list: 0,

//...

};

//...

agent.setContext({

name: 'vars',

lifespan: 1000,

'parameters': vars

});

//...

Con estas 6 capacidades humanas ya tenéis las claves para poder hacer algo similar vosotros mismos y dar mucha más funcionalidad a Google Assistant.

Espero que os resulte útil, tanto la acción en sí como la información extra. Si es así compartidlo y difundid la palabra.

Seguiremos con algunos otros sistemas que nos permiten también hacer chatbots de un modo sencillo, y con cómo integrar nuestros bots en distintas plataformas.

Bitcoin is now entering a death spiral, writes Atuyla Sarin.

Bitcoin is now entering a death spiral, writes Atuyla Sarin. Last week, the U.S. federal court ruled a case between the SEC and a crypto initial coin offering (ICO) project called Blockvest in favor of the project.

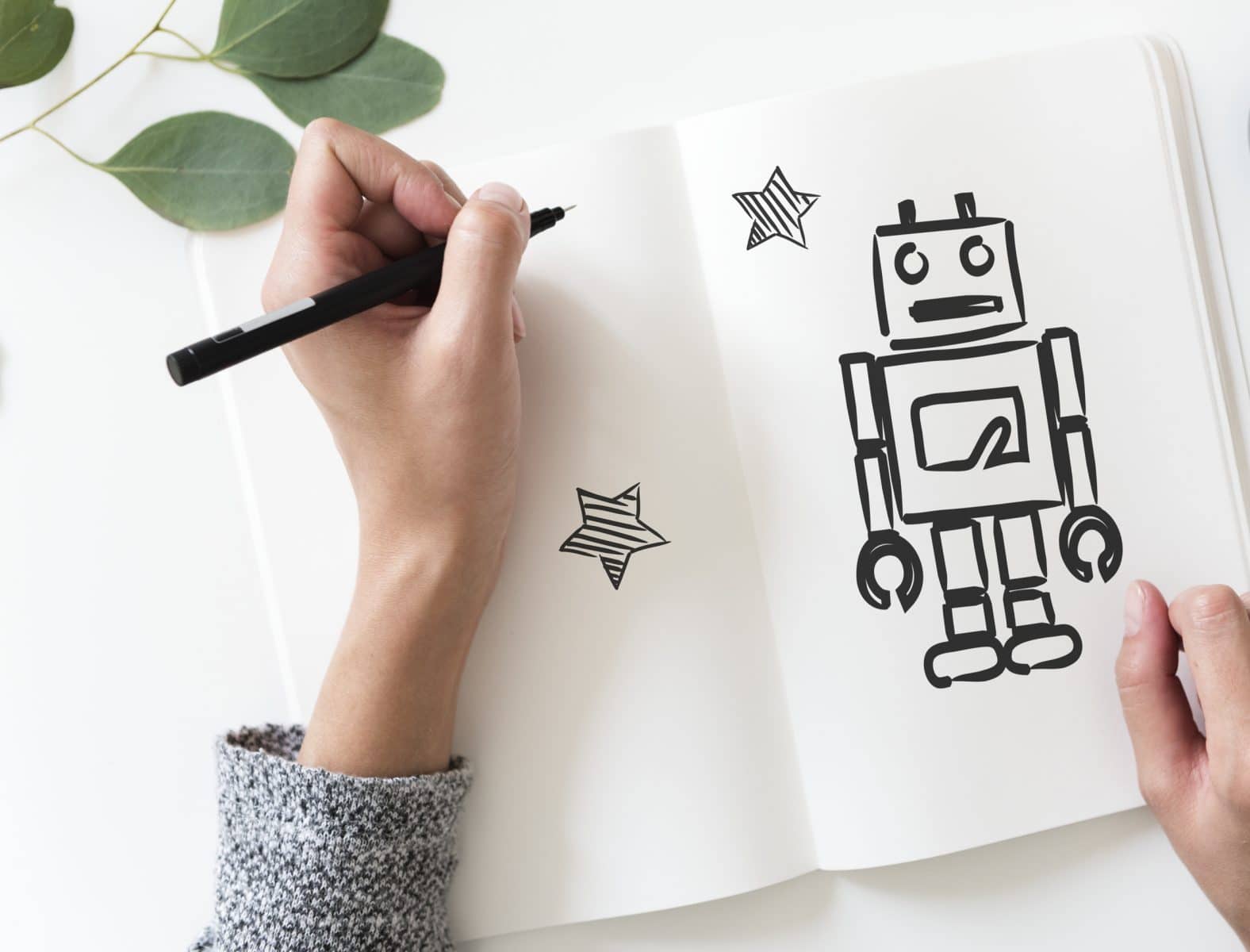

Last week, the U.S. federal court ruled a case between the SEC and a crypto initial coin offering (ICO) project called Blockvest in favor of the project. We found our hard to diagnose Python memory leak problem in numpy and numba using C/C++. It turned out that the numpy array resulting from the above operation was being passed to a numba generator compiled in «nopython» mode. This generator was not being iterated to completion, which caused the leak.

We found our hard to diagnose Python memory leak problem in numpy and numba using C/C++. It turned out that the numpy array resulting from the above operation was being passed to a numba generator compiled in «nopython» mode. This generator was not being iterated to completion, which caused the leak. When a pair of California Highway Patrol officers pulled alongside a car cruising down Highway 101 in Redwood City before dawn Friday, they reported a shocking sight: a man fast asleep behind the wheel.

When a pair of California Highway Patrol officers pulled alongside a car cruising down Highway 101 in Redwood City before dawn Friday, they reported a shocking sight: a man fast asleep behind the wheel. Monopolies are bad.

Monopolies are bad. Researchers have discovered that the so-called Rowhammer technique works on «error-correcting code» memory, in what amounts to a serious escalation.

Researchers have discovered that the so-called Rowhammer technique works on «error-correcting code» memory, in what amounts to a serious escalation.

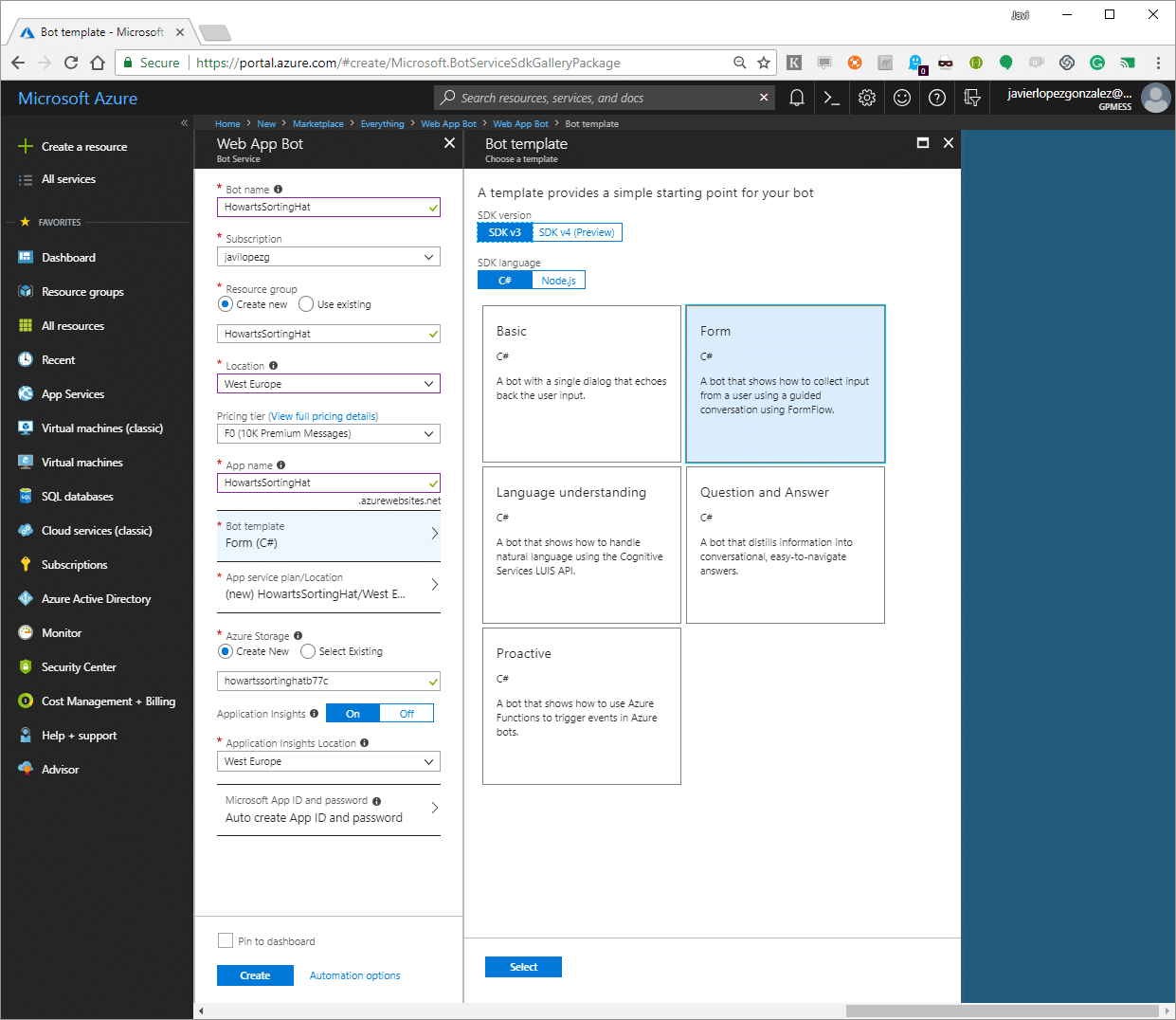

We also have to do some minor changes in the controller to allow it use our new model instead of the one we deleted. The file name is MessagesController.cs and we will change references to the model on MakeRootDialog method.

We also have to do some minor changes in the controller to allow it use our new model instead of the one we deleted. The file name is MessagesController.cs and we will change references to the model on MakeRootDialog method. When we are capturing all the data, we only have to process that and to do this we will change code inside BuildForm method of our SortingTest. If we test it again, we will see that we already have all working. However, it’s not very beautiful that if our bot is made for Spanish speakers it speaks in English. FormFlow is ready to localize it to different languages but in our case only will change some details using attributes over our model.

When we are capturing all the data, we only have to process that and to do this we will change code inside BuildForm method of our SortingTest. If we test it again, we will see that we already have all working. However, it’s not very beautiful that if our bot is made for Spanish speakers it speaks in English. FormFlow is ready to localize it to different languages but in our case only will change some details using attributes over our model.